Multi-Armed Bandit Problem

Introduction

Imagine a row of slot machines, each with a different, unknown probability of paying off. Which machines should you play, and how often?

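Exploit vs. explore: playing the machine that looks best so far (exploit) competes with trying the other machines to learn more about them (explore).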

Application Areas

Model for A/B testing: an ad that someone either clicks or does not click

Medical diagnosis: choosing between a well-known treatment and a new treatment

Disease epidemics

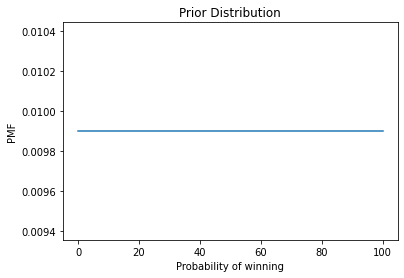

Prior

We assume a uniform prior here: each of the 101 candidate values from 0 to 100 (in percent) for a machine's probability of winning starts out equally likely.

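from empiricaldist import Pmf  # assumed source of Pmf
import matplotlib.pyplot as plt

# likelihood_bandit and decorate_bandit are helpers defined elsewhere in the notebook.
def posterior(n_w=1, n_l=9):
    """Plot the uniform prior and the posterior after n_w wins and n_l losses."""
    bandit = Pmf.from_seq(range(101))  # uniform prior over 0..100
    outcomes = 'W' * n_w + 'L' * n_l
    bandit.plot(color='steelblue', label='Prior', linestyle='--')
    # Update the belief once per observed outcome
    for data in outcomes:
        bandit.update(likelihood_bandit, data)
    bandit.plot(color='steelblue', label='Posterior')
    plt.legend()
    decorate_bandit(title='Prior vs Posterior')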
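The update step depends on `likelihood_bandit`, which this section does not show. As a rough sketch of what such an update does, the pure-Python version below assumes each hypothesis in 0..100 is a win probability in percent, so a win has likelihood `x/100` and a loss has likelihood `1 - x/100`. The names `likelihood_bandit_sketch` and `update_sketch` are hypothetical and are not the notebook's own helpers.

```python
def likelihood_bandit_sketch(data, hypo):
    """Likelihood of one outcome ('W' or 'L') if the win probability is hypo percent."""
    x = hypo / 100
    return x if data == 'W' else 1 - x


def update_sketch(pmf, data):
    """Multiply each prior probability by the likelihood, then renormalize."""
    unnormalized = {h: p * likelihood_bandit_sketch(data, h) for h, p in pmf.items()}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


# Uniform prior over 0..100, then one win followed by nine losses
pmf = {h: 1 / 101 for h in range(101)}
for outcome in 'W' + 'L' * 9:
    pmf = update_sketch(pmf, outcome)

most_likely = max(pmf, key=pmf.get)
posterior_mean = sum(h * p for h, p in pmf.items())
print(most_likely, round(posterior_mean, 1))
```

With 1 win in 10 plays the posterior peaks at 10, matching the observed win rate, while the posterior mean sits somewhat higher because the distribution is skewed to the right.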

Simulate Machines Based on Given Probabilities

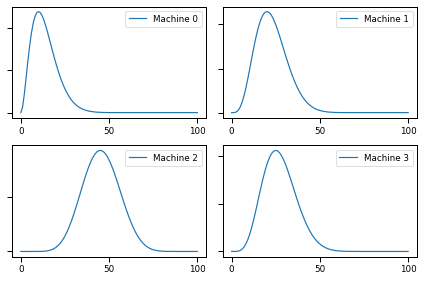
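A minimal sketch of what simulating machines with fixed win probabilities can look like. The values in `example_probs` are hypothetical placeholders, not necessarily the probabilities used in this notebook, and `simulate_plays` is an illustrative name.

```python
import random
from collections import Counter

# Hypothetical win probabilities, one per machine (placeholders for illustration)
example_probs = [0.10, 0.20, 0.30, 0.40]


def simulate_plays(probs, n_plays, rng):
    """Play every machine n_plays times; return one Counter of 'W'/'L' per machine."""
    results = []
    for p in probs:
        outcomes = ['W' if rng.random() < p else 'L' for _ in range(n_plays)]
        results.append(Counter(outcomes))
    return results


rng = random.Random(42)  # seeded so the run is repeatable
results = simulate_plays(example_probs, n_plays=1000, rng=rng)
for i, tally in enumerate(results):
    print(f"Machine {i}: observed win rate {tally['W'] / 1000:.3f}")
```

With many plays per machine, the observed win rates should settle near the probabilities that were passed in.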

Playing machines 20 times

[['L', 'L', 'L', 'L'],
 ['L', 'W', 'L', 'W'],
 ['L', 'W', 'L', 'L'],
 ['L', 'W', 'W', 'L'],
 ['L', 'W', 'L', 'W'],
 ['L', 'L', 'L', 'L'],
 ['L', 'L', 'W', 'L'],
 ['L', 'W', 'W', 'L'],
 ['L', 'L', 'L', 'W'],
 ['W', 'W', 'L', 'L'],
 ['L', 'L', 'L', 'L'],
 ['L', 'L', 'W', 'L'],
 ['W', 'W', 'W', 'L'],
 ['L', 'W', 'L', 'L'],
 ['L', 'W', 'W', 'W'],
 ['L', 'W', 'L', 'W'],
 ['L', 'W', 'W', 'L'],
 ['W', 'L', 'L', 'L'],
 ['L', 'L', 'L', 'L'],
 ['L', 'L', 'L', 'L']]
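To read the outcome table, it helps to tally wins per machine. The sketch below assumes each row is one round and each column is one machine, which is consistent with the nested loop in this notebook that plays machines 0 to 3 in every round. The rows are copied from the output above in compact string form.

```python
# Outcomes copied from the table above: one string per round, one character per machine
rounds = [
    "LLLL", "LWLW", "LWLL", "LWWL", "LWLW",
    "LLLL", "LLWL", "LWWL", "LLLW", "WWLL",
    "LLLL", "LLWL", "WWWL", "LWLL", "LWWW",
    "LWLW", "LWWL", "WLLL", "LLLL", "LLLL",
]

n_machines = 4
wins = [sum(row[j] == 'W' for row in rounds) for j in range(n_machines)]
win_rates = [w / len(rounds) for w in wins]

for j in range(n_machines):
    print(f"Machine {j}: {wins[j]} wins in {len(rounds)} plays ({win_rates[j]:.2f})")
```

Under that reading, machine 1 won most often in this run (11 of 20 plays), but with only 20 plays per machine the observed rates are noisy estimates of the underlying probabilities.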

The initial beliefs are a list of four identical uniform distributions, one per machine. Each assigns probability 1/101 ≈ 0.009901 to every value from 0 to 100:

0      0.009901
1      0.009901
2      0.009901
3      0.009901
4      0.009901
         ...
96     0.009901
97     0.009901
98     0.009901
99     0.009901
100    0.009901
Length: 101, dtype: float64

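import matplotlib.pyplot as plt
import seaborn as sns

options = dict(xticklabels='invisible', yticklabels='invisible')

def plot(beliefs, label_pre='Prior', **options):
    """Draw each machine's distribution in its own panel of a 2x2 grid."""
    sns.set_context('paper')
    for i, b in enumerate(beliefs):
        # The label distinguishes prior panels from posterior panels
        plt.subplot(2, 2, i + 1, label=f"{label_pre}{i}")
        b.plot(label=f"Machine {i}")
        plt.gca().set_yticklabels([])
        plt.legend()
    plt.tight_layout()
    sns.set_context('talk')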

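from collections import Counter
from random import random

prior = range(101)
counter = Counter()  # number of plays per machine

def flip(p):
    """Return True with probability p."""
    return random() < p

def play(i):
    """Play machine i once and return 'W' (win) or 'L' (loss)."""
    counter[i] += 1
    # actual_probs holds the true win probability of each machine;
    # it is assumed to be defined in the simulation section above
    p = actual_probs[i]
    if flip(p):
        return 'W'
    else:
        return 'L'

def update(beliefs, i, outcome):
    """Update the belief about machine i with one observed outcome."""
    beliefs[i].update(likelihood_bandit, outcome)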

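from empiricaldist import Pmf  # assumed source of Pmf

# One uniform prior per machine
beliefs = [Pmf.from_seq(prior) for _ in range(4)]
plot(beliefs, label_pre='Prior')

# Play each of the four machines 20 times, updating its belief after every play
for i in range(20):
    for j in range(4):
        update(beliefs, j, play(j))

plot(beliefs, label_pre='Posterior')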

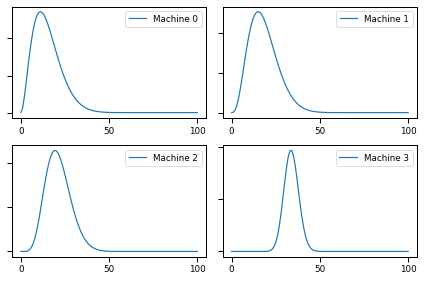

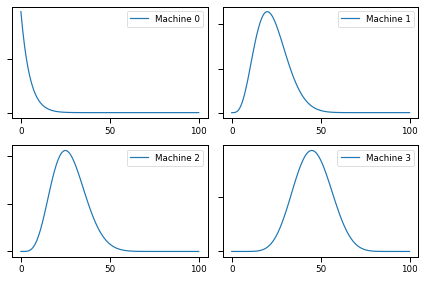

Bayesian Bandit

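The idea is to choose the best course of action while the experiment or simulation is still running, using what has been learned so far to decide which machine to play next.

`choice` internally calls `np.random.choice` on the quantities, which here are the values 0 through 100: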

array([  0,   1,   2,   3,   4,   5,   6,   7,   8,   9,  10,  11,  12,
        13,  14,  15,  16,  17,  18,  19,  20,  21,  22,  23,  24,  25,
        26,  27,  28,  29,  30,  31,  32,  33,  34,  35,  36,  37,  38,
        39,  40,  41,  42,  43,  44,  45,  46,  47,  48,  49,  50,  51,
        52,  53,  54,  55,  56,  57,  58,  59,  60,  61,  62,  63,  64,
        65,  66,  67,  68,  69,  70,  71,  72,  73,  74,  75,  76,  77,
        78,  79,  80,  81,  82,  83,  84,  85,  86,  87,  88,  89,  90,
        91,  92,  93,  94,  95,  96,  97,  98,  99, 100])
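One common way to act on this idea is Thompson sampling: draw one random value from each machine's current posterior, play the machine whose draw is highest, then update that machine's belief with the result. The source does not name the strategy, so treating it as Thompson sampling is an assumption. The sketch below is pure Python, uses `random.choices` as a stand-in for the weighted draw, assumes each quantity is a win probability in percent, and takes hypothetical win probabilities; `draw`, `update`, and `run_bandit` are illustrative names.

```python
import random


def draw(pmf, rng):
    """Weighted random draw of one quantity from a {quantity: probability} dict."""
    qs = list(pmf)
    return rng.choices(qs, weights=[pmf[q] for q in qs])[0]


def update(pmf, outcome):
    """Bayesian update of one belief, assuming quantity q means a q percent win chance."""
    for q in pmf:
        pmf[q] *= q / 100 if outcome == 'W' else 1 - q / 100
    total = sum(pmf.values())
    for q in pmf:
        pmf[q] /= total


def run_bandit(probs, n_rounds, seed=0):
    """Thompson sampling sketch: returns how many times each machine was played."""
    rng = random.Random(seed)
    beliefs = [{q: 1 / 101 for q in range(101)} for _ in probs]
    plays = [0] * len(probs)
    for _ in range(n_rounds):
        # Sample a plausible win probability per machine and play the best-looking one
        samples = [draw(b, rng) for b in beliefs]
        i = samples.index(max(samples))
        outcome = 'W' if rng.random() < probs[i] else 'L'
        plays[i] += 1
        update(beliefs[i], outcome)
    return plays


# Hypothetical win probabilities for four machines
plays = run_bandit([0.10, 0.20, 0.30, 0.40], n_rounds=500)
print(plays)
```

Because machines that look promising produce high draws more often, play gradually concentrates on the better machines while the others are still tried occasionally.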