Lecture 2: Evidence and p-value

Introduction

Synopsis: What did we learn in this lecture?

ML Problems: Classification & Regression

Validation set, overfitting, and hints for selecting a representative set

Metrics vs. loss [important question: does training loss going down while validation loss goes up indicate overfitting?]

Not always: follow the metric, not the loss

Transfer Learning and Fine-tuning

Why transfer learning works (Zeiler & Fergus visualization paper)

State of DL now [What works and what doesn’t]

Filters/features & catastrophic forgetting [if you want your model to keep performing on old data, train on old samples + new data]

Model Zoo

Interpreting p-values, utilities

p-values almost always confuse the issue

Is a multivariate p-value more robust [t-statistics]?

Choose the reverse of your hypothesis as the null hypothesis and check whether you have sufficient data to reject it; otherwise no decision can be made

Drivetrain approach

Objectives => Levers => Data Collection => Model

Strategy (Sources of value, Levers) => Data (Availability, Suitability) => Analytics (Predictions, Insights) => Implementation (IT, Human Capital) => Maintenance (Environment Changes)

Identify & Manage Constraints across spectrum

Prior Belief, Evidence and Utility View

Data Curation

Bing image search

L object

verify_images

DataBlock API

blocks

get_x, get_y

item_tfms

Model Export

Inference

Covid Paper

Seasonality

Transmissibility

Group of cities

p-value

Visualization

How to read a paper & criticism

Implementation Plan

Curate Dataset

Apply the DataBlock API to multiple datasets

Explore more on p-value, t2 stats

Read Drivetrain paper in detail and understand modeler, simulator and optimizer concepts
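As a starting point for the p-value item in the plan above, the sketch below computes an exact two-sided permutation p-value for a difference in means. It is only an illustration: the function name `permutation_p_value` and the two small samples are hypothetical and do not come from the lecture or the Covid paper. The null hypothesis here is that the group labels are exchangeable, i.e. the reverse of the claim "the groups differ", in line with the note in the synopsis.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(a, b):
    """Exact two-sided permutation p-value for a difference in means.

    Enumerates every way of splitting the pooled values into groups of the
    original sizes, so it is only practical for small samples.
    """
    pooled = list(a) + list(b)
    observed = abs(mean(a) - mean(b))
    n, k = len(pooled), len(a)
    total = 0
    extreme = 0
    for idx in combinations(range(n), k):
        chosen = set(idx)
        group_a = [pooled[i] for i in idx]
        group_b = [pooled[i] for i in range(n) if i not in chosen]
        total += 1
        # Count splits whose difference is at least as large as the observed
        # one (small tolerance for floating-point noise).
        if abs(mean(group_a) - mean(group_b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Hypothetical, made-up measurements for two groups.
group_1 = [1, 2, 3, 4]
group_2 = [10, 11, 12, 13]
print(permutation_p_value(group_1, group_2))
```

A small p-value only says the observed difference would be unusual if the null hypothesis were true; it says nothing about how large or how useful the effect is, which is why the synopsis pairs p-values with utilities.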

DataBlock API

PETS problem using the DataBlock API

| epoch | train_loss | valid_loss | error_rate | accuracy | time |
|---|---|---|---|---|---|
| 0 | 3.252484 | 2.097097 | 0.566644 | 0.433356 | 07:20 |
| 1 | 2.311346 | 1.852842 | 0.519621 | 0.480379 | 08:34 |
| 2 | 2.041009 | 1.734501 | 0.502030 | 0.497970 | 08:36 |
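The notebook cell that produced the PETS table is not shown. A minimal sketch of how the synopsis items (`blocks`, `get_y`, `item_tfms`) could fit together for this dataset is given below. It assumes fastai v2 is installed; the function name `build_pets_dataloaders`, the regex labeller, the image sizes, and the split are assumptions borrowed from the usual fastai PETS example, not the author's actual settings.

```python
def build_pets_dataloaders(batch_size=64):
    """Build DataLoaders for the Oxford-IIIT Pets images with the DataBlock API."""
    # Imported inside the function so that defining it does not require fastai.
    from fastai.vision.all import (
        URLs, untar_data, DataBlock, ImageBlock, CategoryBlock,
        get_image_files, RandomSplitter, RegexLabeller, using_attr,
        Resize, aug_transforms,
    )

    path = untar_data(URLs.PETS)  # downloads the dataset on first use
    pets = DataBlock(
        blocks=(ImageBlock, CategoryBlock),       # input type, target type
        get_items=get_image_files,                # how to list the items
        splitter=RandomSplitter(valid_pct=0.2, seed=42),
        # The breed is encoded in the file name, e.g. "great_pyrenees_173.jpg".
        get_y=using_attr(RegexLabeller(r"(.+)_\d+\.jpg$"), "name"),
        item_tfms=Resize(460),                    # per-item resize on the CPU
        batch_tfms=aug_transforms(size=224, min_scale=0.75),
    )
    return pets.dataloaders(path / "images", bs=batch_size)


# Example use (downloads data, so it is left commented out):
# dls = build_pets_dataloaders()
# dls.show_batch(max_n=9)
```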


Data Curation

Google

Datasets

Bears

(#425) [Path('bears/teddy/00000046.jpg'),Path('bears/teddy/00000119.jpg'),Path('bears/teddy/00000041.jpg'),Path('bears/teddy/00000090.jpg'),Path('bears/teddy/00000117.jpg'),Path('bears/teddy/00000069.png'),Path('bears/teddy/00000033.jpg'),Path('bears/teddy/00000034.jpg'),Path('bears/teddy/00000110.jpg'),Path('bears/teddy/00000048.jpg')...]
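The listing above shows that each image lives in a folder named after its class (`bears/teddy/...`). A `get_y` function can therefore read the label from the parent folder, which is what fastai's `parent_label` does. The stdlib-only sketch below illustrates the idea; `label_from_parent` is a hypothetical helper, and the three paths are copied from the listing.

```python
from collections import Counter
from pathlib import Path


def label_from_parent(path):
    """Return the name of the folder that directly contains the file."""
    return Path(path).parent.name


files = [
    Path("bears/teddy/00000046.jpg"),
    Path("bears/teddy/00000119.jpg"),
    Path("bears/teddy/00000069.png"),
]
labels = [label_from_parent(f) for f in files]
print(Counter(labels))  # Counter({'teddy': 3})
```

Counting labels this way is also a quick check on class balance before training.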

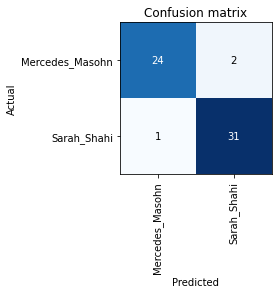

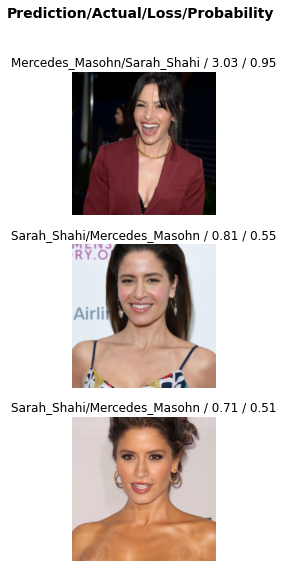

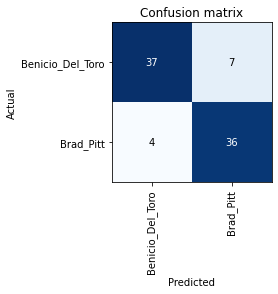

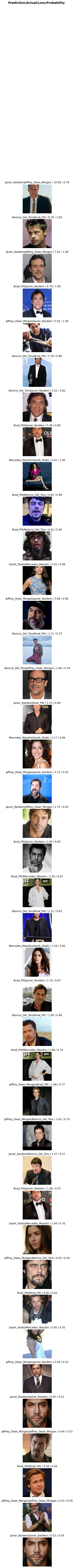

Doppelganger

# def construct_image_dataset(clstypes, dest, key=key, count=150):
#     path = Path(dest)
#     if not path.exists():
#         path.mkdir(exist_ok=True)
#         for o in clstypes:
#             d = o.replace(" ", "_")
#             dest = (path/d)
#             print(f"Dowloading images in {d}")
#             dest.mkdir(exist_ok=True)
#             results = search_images_bing(key, o, count=count)
#             download_images(dest, urls=results.attrgot("content_url"))
#             print(f"Finished Dowloading images in {d}")
#     for i in range(3):
#         fns = get_image_files(path)
#         failed = verify_images(fns)
#         print(failed)
#         failed.map(Path.unlink)
#     return path
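The training cell itself is not included in the export. Assuming the images end up in one folder per class, as the download function arranges, a training run could be sketched as follows. The function name `train_folder_classifier`, the `resnet34` architecture, the crop size, and the epoch counts are assumptions, not the author's recorded settings; older fastai versions call `vision_learner` `cnn_learner`.

```python
def train_folder_classifier(path, frozen_epochs=5, unfrozen_epochs=2):
    """Train an image classifier on a folder-per-class dataset."""
    # Imported inside the function so that defining it does not require fastai.
    from fastai.vision.all import (
        DataBlock, ImageBlock, CategoryBlock, get_image_files,
        RandomSplitter, parent_label, RandomResizedCrop, aug_transforms,
        vision_learner, resnet34, error_rate, accuracy,
    )

    block = DataBlock(
        blocks=(ImageBlock, CategoryBlock),
        get_items=get_image_files,
        splitter=RandomSplitter(valid_pct=0.2, seed=42),
        get_y=parent_label,                          # class = parent folder name
        item_tfms=RandomResizedCrop(224, min_scale=0.5),
        batch_tfms=aug_transforms(),
    )
    dls = block.dataloaders(path)
    learn = vision_learner(dls, resnet34, metrics=[error_rate, accuracy])
    # fine_tune first trains the new head with the body frozen, then unfreezes
    # and trains the whole network; each phase prints its own results table.
    learn.fine_tune(unfrozen_epochs, freeze_epochs=frozen_epochs)
    return learn


# Example use (needs a dataset on disk, so it is left commented out):
# learn = train_folder_classifier("Doppelganger2")
```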

| epoch | train_loss | valid_loss | error_rate | accuracy | time |
|---|---|---|---|---|---|
| 0 | 1.023837 | 0.849942 | 0.431035 | 0.568965 | 00:15 |
| 1 | 1.047065 | 0.581088 | 0.327586 | 0.672414 | 00:21 |
| 2 | 0.912259 | 0.429392 | 0.189655 | 0.810345 | 00:21 |
| 3 | 0.820823 | 0.318312 | 0.137931 | 0.862069 | 00:20 |
| 4 | 0.715490 | 0.220695 | 0.120690 | 0.879310 | 00:22 |

| epoch | train_loss | valid_loss | error_rate | accuracy | time |
|---|---|---|---|---|---|
| 0 | 0.283033 | 0.150566 | 0.017241 | 0.982759 | 00:24 |
| 1 | 0.237740 | 0.141855 | 0.051724 | 0.948276 | 00:27 |

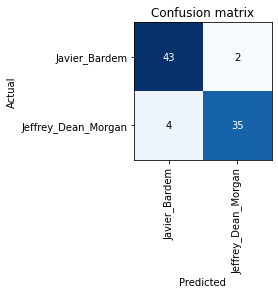

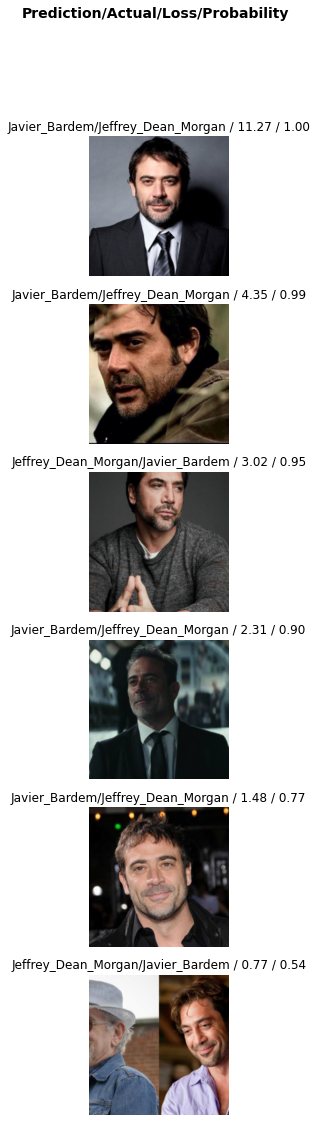

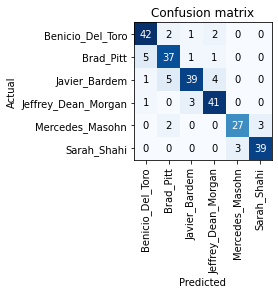

Doppelganger2

(#13) [Path('Doppelganger2/Jeffrey_Dean_Morgan/00000041.jpg'),Path('Doppelganger2/Jeffrey_Dean_Morgan/00000063.jpg'),Path('Doppelganger2/Jeffrey_Dean_Morgan/00000111.jpg'),Path('Doppelganger2/Jeffrey_Dean_Morgan/00000116.jpg'),Path('Doppelganger2/Jeffrey_Dean_Morgan/00000007.jpg'),Path('Doppelganger2/Javier_Bardem/00000027.jpg'),Path('Doppelganger2/Javier_Bardem/00000029.jpg'),Path('Doppelganger2/Javier_Bardem/00000034.jpg'),Path('Doppelganger2/Javier_Bardem/00000041.jpg'),Path('Doppelganger2/Javier_Bardem/00000065.jpg')...]

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 1.216884 | 0.837433 | 0.571429 | 0.428571 | 00:25 |
| 1 | 1.125102 | 0.537283 | 0.785714 | 0.214286 | 00:25 |
| 2 | 0.942884 | 0.531028 | 0.821429 | 0.178571 | 00:27 |
| 3 | 0.814877 | 0.549914 | 0.833333 | 0.166667 | 00:24 |
| 4 | 0.693545 | 0.521625 | 0.857143 | 0.142857 | 00:24 |

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 0.247736 | 0.401182 | 0.892857 | 0.107143 | 00:30 |
| 1 | 0.171536 | 0.322162 | 0.916667 | 0.083333 | 00:30 |
| 2 | 0.128251 | 0.308142 | 0.928571 | 0.071429 | 00:30 |
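The first Doppelganger2 table gives a concrete instance of the "follow the metric, not the loss" note from the synopsis: between epochs 2 and 3 the validation loss rises while accuracy still improves. The short sketch below flags such epochs; the helper name `loss_up_metric_up` is hypothetical, and the numbers are copied from that table.

```python
# (epoch, valid_loss, accuracy) copied from the first Doppelganger2 table.
history = [
    (0, 0.837433, 0.571429),
    (1, 0.537283, 0.785714),
    (2, 0.531028, 0.821429),
    (3, 0.549914, 0.833333),
    (4, 0.521625, 0.857143),
]


def loss_up_metric_up(history):
    """Return epochs where validation loss got worse but accuracy got better."""
    flagged = []
    for (_, prev_loss, prev_acc), (epoch, loss, acc) in zip(history, history[1:]):
        if loss > prev_loss and acc > prev_acc:
            flagged.append(epoch)
    return flagged


print(loss_up_metric_up(history))  # [3]
```

Stopping at epoch 2 because the validation loss ticked up would have given up accuracy that the later epochs still gained.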

Doppelganger3

Download of https://dailystormer.name/wp-content/uploads/2019/11/brad-pitt-2.jpg has failed after 5 retries

Fix the download manually:

$ mkdir -p Doppelganger3/Brad_Pitt

$ cd Doppelganger3/Brad_Pitt

$ wget -c https://dailystormer.name/wp-content/uploads/2019/11/brad-pitt-2.jpg

$ tar xf brad-pitt-2.jpg

And re-run your code once the download is successful

Finished Dowloading images in Brad_Pitt

(#14) [Path('Doppelganger3/Brad_Pitt/00000040.jpg'),Path('Doppelganger3/Brad_Pitt/00000149.jpg'),Path('Doppelganger3/Brad_Pitt/00000125.jpg'),Path('Doppelganger3/Brad_Pitt/00000059.jpg'),Path('Doppelganger3/Brad_Pitt/00000130.jpg'),Path('Doppelganger3/Brad_Pitt/00000137.jpg'),Path('Doppelganger3/Brad_Pitt/00000013.jpg'),Path('Doppelganger3/Brad_Pitt/00000037.jpg'),Path('Doppelganger3/Brad_Pitt/00000138.jpg'),Path('Doppelganger3/Brad_Pitt/00000060.jpg')...]

| epoch | train_loss | valid_loss | error_rate | accuracy | time |
|---|---|---|---|---|---|
| 0 | 1.391564 | 0.935234 | 0.464286 | 0.535714 | 00:25 |
| 1 | 1.172075 | 0.878080 | 0.452381 | 0.547619 | 00:24 |
| 2 | 1.053020 | 0.914704 | 0.392857 | 0.607143 | 00:25 |
| 3 | 0.870856 | 0.782933 | 0.297619 | 0.702381 | 00:24 |
| 4 | 0.721680 | 0.565612 | 0.238095 | 0.761905 | 00:24 |

| epoch | train_loss | valid_loss | error_rate | accuracy | time |
|---|---|---|---|---|---|
| 0 | 0.180159 | 0.497945 | 0.190476 | 0.809524 | 00:29 |
| 1 | 0.166392 | 0.430486 | 0.142857 | 0.857143 | 00:30 |
| 2 | 0.134516 | 0.393905 | 0.130952 | 0.869048 | 00:29 |

DoppelgangerMixed

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 0.093747 | 0.474693 | 0.830116 | 0.169884 | 00:27 |
| 1 | 0.105986 | 0.514394 | 0.818533 | 0.181467 | 00:25 |
| 2 | 0.120812 | 0.532366 | 0.849421 | 0.150579 | 00:27 |
| 3 | 0.122049 | 0.643733 | 0.826255 | 0.173745 | 00:26 |

| epoch | train_loss | valid_loss | accuracy | error_rate | time |
|---|---|---|---|---|---|
| 0 | 0.096170 | 0.538056 | 0.837838 | 0.162162 | 00:32 |
| 1 | 0.085646 | 0.545626 | 0.857143 | 0.142857 | 00:29 |
| 2 | 0.075069 | 0.524330 | 0.872587 | 0.127413 | 00:31 |
| 3 | 0.074985 | 0.526975 | 0.868726 | 0.131274 | 00:28 |
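The synopsis lists model export and inference, but the export does not include those cells. Once a learner such as the ones trained above exists, the two steps could be sketched as below, assuming fastai v2. The helper names `export_model` and `predict_image` and the default file name are hypothetical.

```python
def export_model(learn, fname="export.pkl"):
    """Serialize a trained fastai Learner; returns the path of the saved file."""
    learn.export(fname)          # saved under learn.path
    return learn.path / fname


def predict_image(export_path, image_path):
    """Load an exported Learner and classify one image file."""
    # Imported inside the function so that defining it does not require fastai.
    from fastai.vision.all import load_learner, PILImage

    learn_inf = load_learner(export_path)
    pred_class, pred_idx, probs = learn_inf.predict(PILImage.create(image_path))
    # Return the predicted label and the probability assigned to it.
    return str(pred_class), float(probs[pred_idx])


# Example use (needs a trained learner and an image, so it is commented out):
# pkl = export_model(learn)
# print(predict_image(pkl, "some_face.jpg"))
```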