Lay of the Land - Spooky Author Identification

Let's start by getting the lay of the land with a code-first approach. The idea is to jump quickly into training NLP models on clean datasets from Kaggle. I am going to follow this wonderful kernel by Abhishek Thakur, a prolific Kaggle Grandmaster, for the Spooky Author Identification competition.

Note

Whenever we start a Kaggle competition, it is useful to look at the evaluation metric first. Real-life projects are not like Kaggle. In the real world, the following activities happen before modelling starts:

1. Identify a business problem.
2. Clarify and refine the problem, and convert it into an ML problem.
3. Collect relevant datasets.
4. Preprocess and clean the datasets.
5. Define an evaluation metric relevant to the value proposition.

However, when we are learning, it can be useful to start with a clean dataset whose evaluation criteria are already defined, so that we can concentrate on learning modelling skills.

Imports

It is better to keep all imports at the top of the notebook.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plot
import xgboost as xgb

from sklearn.preprocessing import LabelEncoder, StandardScaler
from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.feature_extraction.text import TfidfVectorizer, CountVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import MultinomialNB
from sklearn.svm import SVC
from sklearn.decomposition import TruncatedSVD
from sklearn import metrics, pipeline
# Used by the grid search pipeline and custom log-loss scorer
from sklearn.pipeline import Pipeline
from sklearn.metrics import make_scorer

from fastai.basics import *
from nlphero.data.external import *
```

Simple EDA

First, let's take a look at the data.

| | id | text | author |
|---|---|---|---|
| 0 | id26305 | This process, however, afforded me no means of ascertaining the dimensions of my dungeon; as I might make its circuit, and return to the point whence I set out, without being aware of the fact; so perfectly uniform seemed the wall. | EAP |
| 1 | id17569 | It never once occurred to me that the fumbling might be a mere mistake. | HPL |
| 2 | id11008 | In his left hand was a gold snuff box, from which, as he capered down the hill, cutting all manner of fantastic steps, he took snuff incessantly with an air of the greatest possible self satisfaction. | EAP |
| 3 | id27763 | How lovely is spring As we looked from Windsor Terrace on the sixteen fertile counties spread beneath, speckled by happy cottages and wealthier towns, all looked as in former years, heart cheering and fair. | MWS |
| 4 | id12958 | Finding nothing else, not even gold, the Superintendent abandoned his attempts; but a perplexed look occasionally steals over his countenance as he sits thinking at his desk. | HPL |

| | id | EAP | HPL | MWS |
|---|---|---|---|---|
| 0 | id02310 | 0.403494 | 0.287808 | 0.308698 |
| 1 | id24541 | 0.403494 | 0.287808 | 0.308698 |
| 2 | id00134 | 0.403494 | 0.287808 | 0.308698 |
| 3 | id27757 | 0.403494 | 0.287808 | 0.308698 |
| 4 | id04081 | 0.403494 | 0.287808 | 0.308698 |

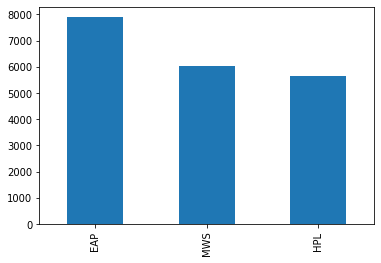

So we have roughly 20K training rows and about 8.4K test rows (a little over 40% of the size of the training data). The classes are reasonably balanced. For every test row, we need to predict the probability of each author.

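Evaluation Metric

For this competition, Kaggle uses multi-class log loss as the evaluation metric, defined in the link here. What does that mean?

For each id, we must predict the probability of each author.

The metric is defined as

$$\text{logloss} = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{M} y_{ij}\,\ln(p_{ij})$$

where $N$ is the number of rows, $M$ is the number of classes (here, three authors), $y_{ij}$ is 1 if row $i$ belongs to author $j$ and 0 otherwise, and $p_{ij}$ is the predicted probability that row $i$ belongs to author $j$. Probabilities are clipped to $[\epsilon, 1-\epsilon]$ with a tiny $\epsilon$ (such as $10^{-15}$) so the logarithm stays finite.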
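Multi-class log loss averages, over all rows, the negative log of the probability assigned to the true class. The sketch below works through three made-up rows (labels and probabilities are illustrative only, not competition data), implements the formula directly with NumPy, and, assuming scikit-learn is installed, cross-checks the result against `sklearn.metrics.log_loss`.

```python
import numpy as np
from sklearn.metrics import log_loss

# Three hypothetical rows; class columns ordered as [EAP, HPL, MWS]
y_true = np.array([0, 1, 2])
p = np.array([
    [0.7, 0.2, 0.1],  # confident and correct
    [0.3, 0.4, 0.3],  # hesitant but correct
    [0.6, 0.3, 0.1],  # confident and wrong (true class MWS gets 0.1)
])

eps = 1e-15
p_clipped = np.clip(p, eps, 1 - eps)   # keep log() finite
y_onehot = np.eye(3)[y_true]           # y_ij = 1 only for the true class
manual = -np.mean(np.sum(y_onehot * np.log(p_clipped), axis=1))

print(manual)
print(log_loss(y_true, p))             # should match the manual value

# Predicting 1/3 for every class scores ln(3) whatever the labels are
uniform = np.full((3, 3), 1 / 3)
print(log_loss(y_true, uniform))
```

The third row alone contributes $-\ln(0.1) \approx 2.30$ to the sum, which shows why log loss punishes confident mistakes so heavily. A uniform guess gives $\ln 3 \approx 1.0986$, a handy sanity baseline that any useful model should beat.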

Modelling

TF-IDF with Logistic Regression

TF-IDF stands for term frequency-inverse document frequency.

A good reference can be found here.

TF (term frequency) is how often a term occurs in a document; it tells us whether a word is used many times in that document or only rarely.

IDF (inverse document frequency) measures how significant a term is across the whole corpus: a term that appears in many documents gets a low IDF, while a term that appears in only a few documents gets a high IDF.

$$\text{TF.IDF}(t,d) = W_{dt} = TF_{dt} \cdot \ln\frac{N}{DF_t}$$

$N$ = total number of documents

$DF_t$ = number of documents containing term $t$

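Put simply, a term gets a high TF.IDF weight when it occurs often in a document but appears in few documents overall. A term that appears in every document gets a weight of zero, because $\ln(N/N) = 0$.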
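To make the formula concrete, here is a minimal sketch that computes $W_{dt} = TF_{dt} \cdot \ln(N/DF_t)$ by hand on a three-document toy corpus. It assumes whitespace tokenisation and raw counts for $TF_{dt}$; the documents and the helper name `tf_idf` are hypothetical, for illustration only.

```python
import math
from collections import Counter

# Hypothetical toy corpus
docs = [
    "the raven and the door",
    "the raven",
    "the ghost",
]
tokenized = [d.split() for d in docs]
N = len(tokenized)  # total number of documents

# DF_t: number of documents containing term t at least once
df = Counter(term for doc in tokenized for term in set(doc))

def tf_idf(term, doc_tokens):
    """W_dt = TF_dt * ln(N / DF_t), with TF_dt as a raw count."""
    tf = doc_tokens.count(term)
    return tf * math.log(N / df[term])

for term in ["the", "raven", "door"]:
    print(term, round(tf_idf(term, tokenized[0]), 3))
```

In the first document, "the" occurs twice but appears in every document, so its weight is 0; "door" occurs once but only in this document, so it gets the largest weight, $\ln 3$. Note that scikit-learn's `TfidfVectorizer` uses a smoothed variant by default, $\ln\frac{1+N}{1+DF_t} + 1$, followed by L2 normalisation, so its numbers differ from this textbook formula.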

```python
def multiclass_logloss(actual, predicted, eps=1e-15):
    """Multi class version of Logarithmic Loss metric.

    :param actual: Array containing the actual target classes (integer-encoded)
    :param predicted: Matrix with class predictions, one probability per class
    """
    actual = np.asarray(actual)
    # Convert 'actual' to a one-hot (binary) array if it's not already:
    if len(actual.shape) == 1:
        actual2 = np.zeros((actual.shape[0], predicted.shape[1]))
        for i, val in enumerate(actual):
            actual2[i, val] = 1
        actual = actual2

    # Clip probabilities away from 0 and 1 so log() stays finite
    clip = np.clip(predicted, eps, 1 - eps)
    rows = actual.shape[0]
    total = np.sum(actual * np.log(clip))  # sum of y_ij * ln(p_ij)
    return -1.0 / rows * total
```

MultinomialNB

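```python
# Defaults are bound to the notebook's train/validation split at definition time
def check_score(clf, vectorizer,
                x_train=x_train, x_valid=x_valid,
                y_train=y_train, y_valid=y_valid):
    # Fit the vocabulary (and IDF weights, if any) on train + validation text
    vectorizer.fit(np.concatenate([x_train['text'].values,
                                   x_valid['text'].values]))
    x_train_vect = vectorizer.transform(x_train['text'].values)
    x_valid_vect = vectorizer.transform(x_valid['text'].values)

    # Train the classifier, then score its predicted probabilities on validation
    clf.fit(x_train_vect, y_train)
    val_preds = clf.predict_proba(x_valid_vect)
    mcll = multiclass_logloss(y_valid, val_preds)
    return mcll
```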
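`check_score` works with any classifier that exposes `predict_proba`, so a Naive Bayes run would look like `check_score(MultinomialNB(), TfidfVectorizer())`. Because that call depends on the notebook's `x_train`/`x_valid` split, here is a self-contained sketch of the same steps on a handful of made-up sentences (hypothetical data, not the competition's), using scikit-learn's built-in `log_loss` in place of the custom metric.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.metrics import log_loss

# Hypothetical toy data labelled with the competition's author codes
train_text = [
    "the raven tapped upon my chamber door",
    "the pit and the pendulum swung low",
    "the eldritch horror slept beneath the sea",
    "cyclopean ruins of a nameless city",
    "the creature gazed upon its creator",
    "the plague swept over the last man",
]
train_y = np.array(["EAP", "EAP", "HPL", "HPL", "MWS", "MWS"])
valid_text = ["a nameless horror beneath the ruins", "the creature and the last man"]
valid_y = np.array(["HPL", "MWS"])

# As in check_score: fit the vocabulary on train + validation text
vectorizer = TfidfVectorizer(ngram_range=(1, 2))
vectorizer.fit(train_text + valid_text)

# MultinomialNB accepts non-negative TF-IDF weights as well as raw counts
clf = MultinomialNB()
clf.fit(vectorizer.transform(train_text), train_y)
val_preds = clf.predict_proba(vectorizer.transform(valid_text))

print(clf.classes_)  # column order of val_preds
print(log_loss(valid_y, val_preds, labels=clf.classes_))
```

Passing `labels=clf.classes_` matters here because the tiny validation set does not contain every author, and `log_loss` would otherwise not know how the three probability columns map to classes.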

XGBoost

CountVectorizer

```python
clf = xgb.XGBClassifier(max_depth=7,
                        n_estimators=200,
                        colsample_bytree=0.8,
                        subsample=0.8,
                        n_jobs=10,  # replaces the deprecated 'nthread' argument
                        learning_rate=0.1)

vectorizer = CountVectorizer(analyzer='word',
                             token_pattern=r'\w{1,}',
                             ngram_range=(1, 3),
                             stop_words='english')

score = check_score(clf, vectorizer)
print(f"logloss: {score:0.3f}")
```
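To see what the `CountVectorizer` settings above actually produce, here is a small sketch that fits the same configuration on a single made-up sentence and lists the resulting features.

```python
from sklearn.feature_extraction.text import CountVectorizer

# Same settings as the CountVectorizer above
vectorizer = CountVectorizer(analyzer='word',
                             token_pattern=r'\w{1,}',
                             ngram_range=(1, 3),
                             stop_words='english')
vectorizer.fit(["The raven tapped at my chamber door"])

# Sorted n-gram features that survived lower-casing and stop-word removal
features = sorted(vectorizer.vocabulary_)
print(features)
```

The stop words "the", "at" and "my" are removed before n-grams are built, so only four tokens remain. These produce 4 unigrams, 3 bigrams and 2 trigrams, and "tapped chamber" becomes a bigram even though "at my" separated those words in the original sentence.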

TfidfVectorizer

```python
clf = xgb.XGBClassifier(max_depth=7,
                        n_estimators=200,
                        colsample_bytree=0.8,
                        subsample=0.8,
                        n_jobs=10,  # replaces the deprecated 'nthread' argument
                        learning_rate=0.1)

vectorizer = TfidfVectorizer(min_df=1,
                             max_features=None,
                             strip_accents='unicode',
                             analyzer='word',
                             token_pattern=r'\w{1,}',
                             ngram_range=(1, 3),
                             use_idf=True,
                             smooth_idf=True,
                             sublinear_tf=True,  # use 1 + ln(tf) instead of raw tf
                             stop_words='english')

score = check_score(clf, vectorizer)
print(f"logloss: {score:0.3f}")
```
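The TF-IDF vectorizer above turns on `sublinear_tf`, which replaces each raw count $tf$ with $1 + \ln(tf)$ so that a word repeated many times in one sentence does not dominate. The sketch below isolates that effect on a made-up document by switching off IDF weighting and normalisation.

```python
import math
from sklearn.feature_extraction.text import TfidfVectorizer

doc = ["ghost ghost ghost raven"]

# use_idf=False and norm=None leave only the term-frequency transform
plain = TfidfVectorizer(use_idf=False, norm=None)
sublinear = TfidfVectorizer(use_idf=False, norm=None, sublinear_tf=True)

plain_row = plain.fit_transform(doc).toarray()[0]
sub_row = sublinear.fit_transform(doc).toarray()[0]

ghost = plain.vocabulary_["ghost"]
print(plain_row[ghost])                          # raw count
print(sub_row[sublinear.vocabulary_["ghost"]])   # 1 + ln(3)
print(1 + math.log(3))
```

With `sublinear_tf=True`, "ghost" gets $1 + \ln 3 \approx 2.10$ instead of 3, while a word that appears once keeps a weight of 1.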

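SVD Transformation

Grid Search

Next, we run a grid search with logistic regression, over a pipeline of TruncatedSVD, StandardScaler and LogisticRegression scored with multi-class log loss.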

```
GridSearchCV(cv=2,
             estimator=Pipeline(steps=[('svd', TruncatedSVD()),
                                       ('scl', StandardScaler()),
                                       ('lr', LogisticRegression())]),
             iid=True, n_jobs=-1,
             param_grid={'lr__C': [0.1, 1.0, 10], 'lr__penalty': ['l1', 'l2'],
                         'svd__n_components': [120, 180]},
             scoring=make_scorer(multiclass_logloss, greater_is_better=False, needs_proba=True),
             verbose=10)
```

```
[Parallel(n_jobs=-1)]: Using backend LokyBackend with 32 concurrent workers.
[Parallel(n_jobs=-1)]: Done 1 tasks | elapsed: 1.5min
[Parallel(n_jobs=-1)]: Done 3 out of 24 | elapsed: 1.5min remaining: 10.7min
[Parallel(n_jobs=-1)]: Done 6 out of 24 | elapsed: 1.6min remaining: 4.7min
[Parallel(n_jobs=-1)]: Done 9 out of 24 | elapsed: 1.6min remaining: 2.7min
[Parallel(n_jobs=-1)]: Done 12 out of 24 | elapsed: 1.6min remaining: 1.6min
[Parallel(n_jobs=-1)]: Done 15 out of 24 | elapsed: 2.2min remaining: 1.3min
[Parallel(n_jobs=-1)]: Done 18 out of 24 | elapsed: 2.2min remaining: 43.9s
[Parallel(n_jobs=-1)]: Done 21 out of 24 | elapsed: 2.2min remaining: 18.9s
[Parallel(n_jobs=-1)]: Done 24 out of 24 | elapsed: 2.2min remaining: 0.0s
[Parallel(n_jobs=-1)]: Done 24 out of 24 | elapsed: 2.2min finished
/home/ubuntu/anaconda3/envs/nlphero/lib/python3.8/site-packages/sklearn/model_selection/_search.py:847: FutureWarning: The parameter 'iid' is deprecated in 0.22 and will be removed in 0.24.
  warnings.warn(
```


```python
model = GridSearchCV(estimator=clf,
                     param_grid=param_grid,
                     scoring=mll_scorer,
                     verbose=10,
                     n_jobs=-1,
                     iid=True,
                     refit=True,
                     cv=2)
model.fit(x_train_tfv, y_train)

# best_score_ is the negated log loss, because the scorer uses greater_is_better=False
print(f"Best Score: {model.best_score_:.3}")
print("Best parameters set:")
best_parameters = model.best_estimator_.get_params()
for param_name in sorted(param_grid.keys()):
    print(param_name, best_parameters[param_name])
```

```
[Parallel(n_jobs=-1)]: Using backend LokyBackend with 32 concurrent workers.
[Parallel(n_jobs=-1)]: Done 1 tasks | elapsed: 1.4min
[Parallel(n_jobs=-1)]: Done 3 out of 24 | elapsed: 1.5min remaining: 10.3min
[Parallel(n_jobs=-1)]: Done 6 out of 24 | elapsed: 1.5min remaining: 4.6min
[Parallel(n_jobs=-1)]: Done 9 out of 24 | elapsed: 1.6min remaining: 2.7min
[Parallel(n_jobs=-1)]: Done 12 out of 24 | elapsed: 1.6min remaining: 1.6min
[Parallel(n_jobs=-1)]: Done 15 out of 24 | elapsed: 2.2min remaining: 1.3min
[Parallel(n_jobs=-1)]: Done 18 out of 24 | elapsed: 2.2min remaining: 43.9s
[Parallel(n_jobs=-1)]: Done 21 out of 24 | elapsed: 2.2min remaining: 18.9s
[Parallel(n_jobs=-1)]: Done 24 out of 24 | elapsed: 2.2min remaining: 0.0s
[Parallel(n_jobs=-1)]: Done 24 out of 24 | elapsed: 2.2min finished
/home/ubuntu/anaconda3/envs/nlphero/lib/python3.8/site-packages/sklearn/model_selection/_search.py:847: FutureWarning: The parameter 'iid' is deprecated in 0.22 and will be removed in 0.24.
  warnings.warn(
```
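As the FutureWarning says, `iid` was deprecated in scikit-learn 0.22 and removed in 0.24, so the call above fails on newer versions. Below is a minimal, self-contained sketch of the same SVD → scaling → logistic regression search under several stated assumptions. It uses synthetic toy sentences (hypothetical data). It uses the built-in `"neg_log_loss"` scorer in place of the custom `make_scorer(...)`; like that scorer, it reports log loss with its sign flipped. The `svd__n_components` values are smaller because the toy vocabulary is tiny. Finally, it uses `solver="saga"`, because the default `lbfgs` solver supports only the l2 penalty, so the `'l1'` candidates in the original grid would fail to fit.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.decomposition import TruncatedSVD
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.model_selection import GridSearchCV

# Hypothetical toy corpus: each sentence mixes author-specific and shared words
rng = np.random.default_rng(0)
vocab = {
    "EAP": ["raven", "chamber", "door", "midnight", "pendulum", "heart"],
    "HPL": ["eldritch", "cyclopean", "horror", "nameless", "abyss", "cult"],
    "MWS": ["creature", "creator", "plague", "wretch", "mountains", "grief"],
}
shared = ["night", "dark", "upon", "old", "strange", "fear"]
texts, labels = [], []
for author, words in vocab.items():
    for _ in range(10):
        tokens = list(rng.choice(words, 4)) + list(rng.choice(shared, 3))
        texts.append(" ".join(tokens))
        labels.append(author)
labels = np.array(labels)

# Precompute TF-IDF features, mirroring x_train_tfv in the notebook
X = TfidfVectorizer().fit_transform(texts)

pipe = Pipeline([
    ("svd", TruncatedSVD(random_state=0)),
    ("scl", StandardScaler()),
    ("lr", LogisticRegression(solver="saga", max_iter=5000)),  # saga handles l1 and l2
])
param_grid = {
    "lr__C": [0.1, 1.0, 10],
    "lr__penalty": ["l1", "l2"],
    "svd__n_components": [4, 8],
}

# No 'iid' argument: it no longer exists in scikit-learn >= 0.24
search = GridSearchCV(pipe, param_grid, scoring="neg_log_loss", cv=2, refit=True)
search.fit(X, labels)

print(f"Best log loss: {-search.best_score_:.3f}")
print(search.best_params_)
```

Fitting TF-IDF before cross-validation mirrors the notebook's precomputed `x_train_tfv`; putting the vectorizer inside the pipeline instead would keep each fold's vocabulary and IDF weights free of validation text.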